Online hate thrives globally through self-organized, scalable clusters that interconnect to form resilient networks spread across multiple social media platforms, countries and languages, according to new research published today in the journal Nature. Researchers at the George Washington University developed a mapping model, the first of its kind, to track how these online hate clusters thrive. They believe it could help social media platforms and law enforcement in the battle against hate online.

With the explosion of social media, individuals can connect with other like-minded people in just a few clicks, and clusters of people with common interests form readily. Recently, online hate ideologies and extremist narratives have been linked to a surge in crimes around the world. To thwart this, researchers led by Neil Johnson, a professor of physics at GW, set out to better understand how online hate evolves and whether it can be stopped.

“Hate destroys lives, not only as we’ve seen in El Paso, Orlando and New Zealand, but psychologically through online bullying and rhetoric,” Dr. Johnson said.

“We set out to get to the bottom of online hate by looking at why it is so resilient and how it can be better tackled. Instead of love being in the air, we found hate is in the ether.”

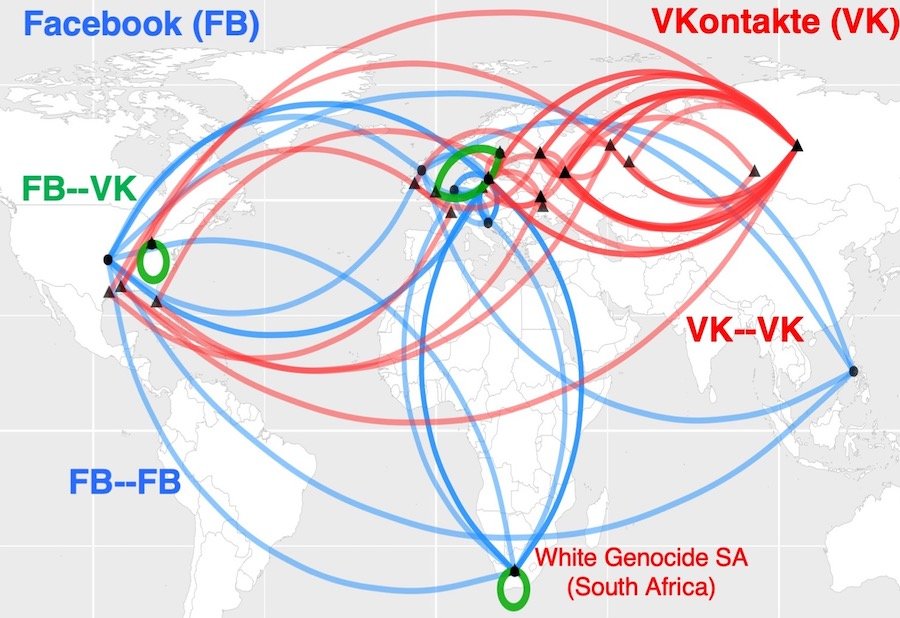

To understand how hate evolves online, the team began by mapping how clusters interconnect to spread their narratives and attract new recruits. Focusing on social media platforms Facebook and its central European counterpart, VKontakte, the researchers started with a given hate cluster and looked outward to find a second one that was strongly connected to the original. They discovered that hate crosses boundaries of specific internet platforms, including Instagram, Snapchat and WhatsApp; geographic location, including the United States, South Africa and parts of Europe; and languages, including English and Russian.
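The outward-looking mapping described above can be pictured as a breadth-first walk over links between clusters. The following is only a minimal sketch under stated assumptions, not the researchers' actual model: the `links` dictionary, the cluster names, the numeric link strengths and the `min_strength` threshold are all hypothetical and chosen purely for illustration.

```python
from collections import deque


def map_clusters(seed, links, min_strength=2):
    """Walk outward from `seed`, following only strongly connected clusters.

    `links` maps a cluster name to {neighbor: link strength}. The data
    layout and the strength threshold are illustrative assumptions.
    """
    seen = {seed}
    edges = []
    queue = deque([seed])
    while queue:
        cluster = queue.popleft()
        for neighbor, strength in sorted(links.get(cluster, {}).items()):
            if strength < min_strength:
                continue  # weak link: not followed
            edges.append((cluster, neighbor))
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen, edges


# Hypothetical toy data: prefixes hint at the platform a cluster lives on.
links = {
    "fb_A": {"vk_B": 5, "fb_C": 1},
    "vk_B": {"ig_D": 3},
    "ig_D": {"fb_A": 2},
    "fb_C": {"fb_E": 9},
}

seen, edges = map_clusters("fb_A", links)
print(sorted(seen))
print(edges)
```

In this toy data the walk starting at `fb_A` crosses platform boundaries through strong links, while the weakly linked `fb_C` (and everything reachable only through it) stays off the map.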

The researchers saw clusters creating new adaptation strategies in order to regroup on other platforms or reenter a platform after being banned. For example, clusters can migrate and reconstitute on other platforms or use different languages to avoid detection. This allows a cluster to quickly bring thousands of supporters back to a platform on which it has been banned, and it highlights the need for cross-platform cooperation to limit online hate groups.

“The analogy is no matter how much weed killer you place in a yard, the problem will come back, potentially more aggressively. In the online world, all yards in the neighborhood are interconnected in a highly complex way—almost like wormholes. This is why individual social media platforms like Facebook need new analysis such as ours to figure out new approaches to push them ahead of the curve,” Dr. Johnson said.

The team, which included researchers at the University of Miami, used insights from its online hate mapping to develop four intervention strategies that social media platforms could immediately implement based on situational circumstances:

- Reduce the power and number of large clusters by banning the smaller clusters that feed into them.

- Attack the Achilles’ heel of online hate groups by randomly banning a small fraction of individual users in order to make the global cluster network fall apart.

- Pit large clusters against each other by helping anti-hate clusters find and engage directly with hate clusters.

- Set up intermediary clusters that engage hate groups to help bring out the differences in ideologies between them and make them begin to question their stance.
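As a rough illustration of the first strategy, the toy sketch below removes small clusters from a hypothetical network and compares how connected the remaining clusters are. Every name, size and link here is invented for the example, and the assumption that small clusters act as bridges between large ones is a simplification for illustration, not a result taken from the study.

```python
def components(nodes, edges):
    """Return the connected components among `nodes`, ignoring edges
    that touch a cluster no longer present."""
    adjacency = {n: set() for n in nodes}
    for a, b in edges:
        if a in adjacency and b in adjacency:
            adjacency[a].add(b)
            adjacency[b].add(a)
    visited = set()
    result = []
    for start in sorted(nodes):
        if start in visited:
            continue
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node in component:
                continue
            component.add(node)
            stack.extend(adjacency[node] - component)
        visited |= component
        result.append(component)
    return result


def ban_small_clusters(sizes, edges, max_size):
    """Drop every cluster with at most `max_size` members, then
    recompute the components of what is left."""
    kept = {name for name, size in sizes.items() if size > max_size}
    return components(kept, edges)


# Hypothetical network: three large clusters bridged by two small ones.
sizes = {"big1": 5000, "big2": 4000, "big3": 3000, "small1": 50, "small2": 80}
edges = [("big1", "small1"), ("small1", "big2"),
         ("big2", "small2"), ("small2", "big3")]

before = components(set(sizes), edges)
after = ban_small_clusters(sizes, edges, max_size=100)
print(len(before), "component(s) before;", len(after), "after")
```

In this toy setup the large clusters are joined only through the small ones, so removing the small clusters leaves each large cluster isolated.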

The researchers noted each of their strategies can be adopted on a global scale and simultaneously across all platforms without having to share the sensitive information of individual users or commercial secrets, which has been a stumbling block before.

Using this map and its mathematical modeling as a foundation, Dr. Johnson and his team are developing software that could help regulators and enforcement agencies implement new interventions.

Dr. Johnson is affiliated with the GW Institute for Data, Democracy, and Politics, which fights the rise of distorted and misleading information online, working to educate national policymakers and journalists on strategies to grapple with the threat to democracy posed by digital propaganda and deception. This research was supported in part by a grant from the U.S. Air Force (FA9550-16-1-0247).