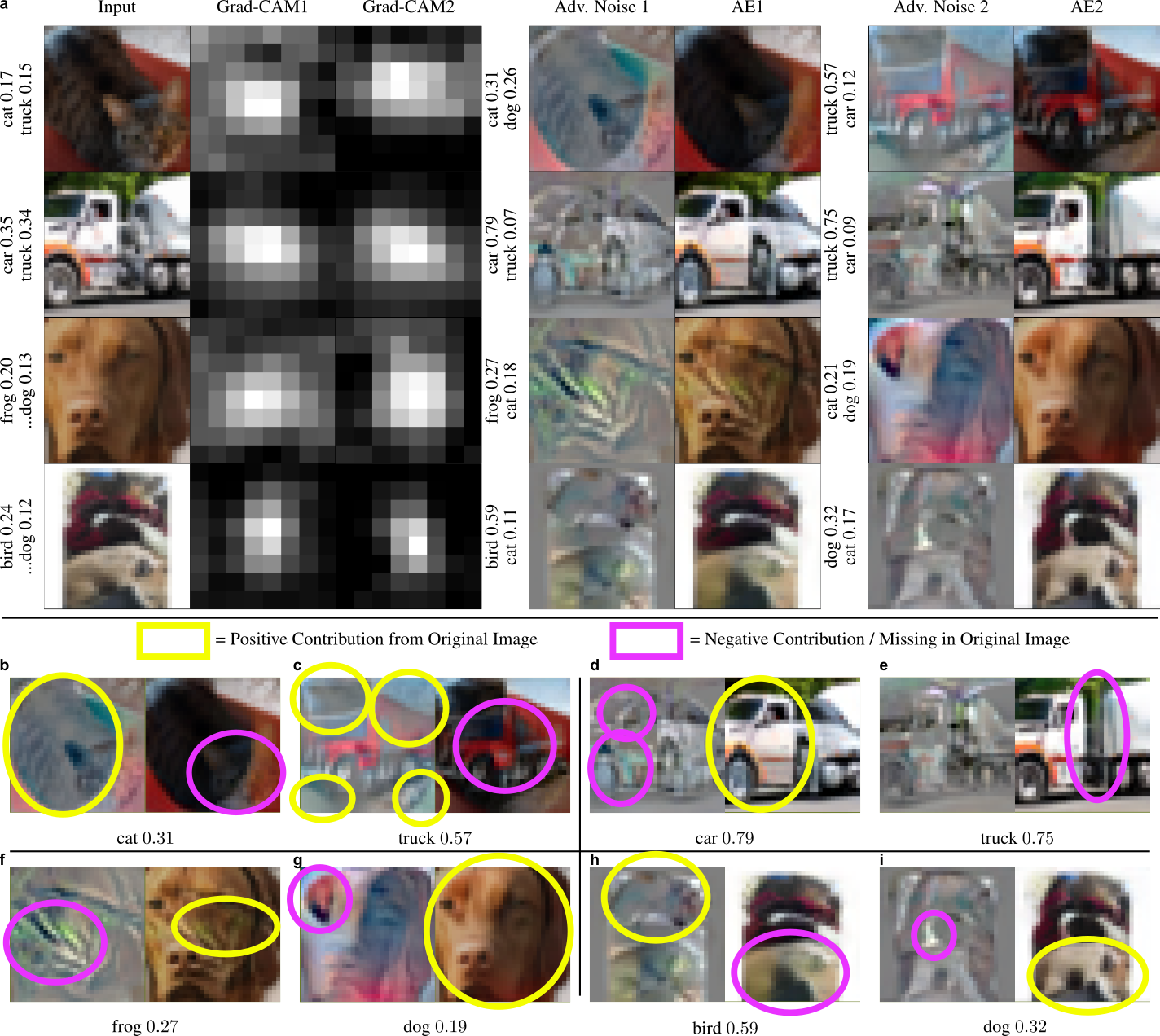

Like humans, Machine Learning (ML)-based systems sometimes make the wrong decision. However, while humans are able to reason about and explain their decisions, ML-based systems do not have a means of reliably informing users of the reasoning behind a decision. This fault is exacerbated by the instability of ML-based systems: they can yield a completely different decision when their input is modified even an imperceptible amount. Adversarial Explanations are a step towards addressing both of these problems, by providing new means of stabilizing a network’s output, and by leveraging that newfound stability to provide explanations more intuitive and reliable than previously possible.

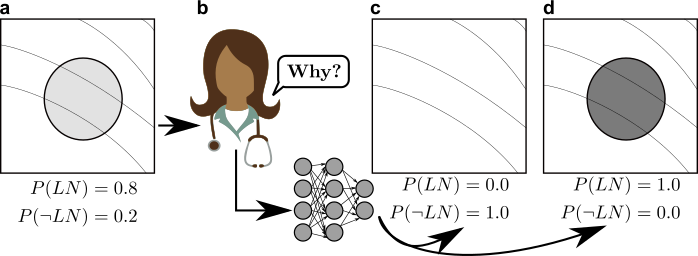

For sensitive problems, such as medical imaging or fraud detection, neural network (NN) adoption has been slow due to concerns about their reliability.

NNs have also been found to be vulnerable to a class of imperceptible attacks, called adversarial examples, which arbitrarily alter the output of the network.

In new study researchers demonstrate both that these attacks can invalidate previous attempts to explain the decisions of NNs, and that with very robust networks, the attacks themselves may be leveraged as explanations with greater fidelity to the model. The article, published in Nature Machine Intelligence, also shows that the introduction of a novel regularization technique inspired by the Lipschitz constraint, alongside other proposed improvements including a half-Huber activation function, greatly improves the resistance of NNs to adversarial examples.

[rand_post]

On the ImageNet classification task, science team demonstrates a network with an accuracy-robustness area (ARA) of 0.0053, an ARA 2.4 times greater than the previous state-of-the-art value. Improving the mechanisms by which NN decisions are understood is an important direction for both establishing trust in sensitive domains and learning more about the stimuli to which NNs respond.